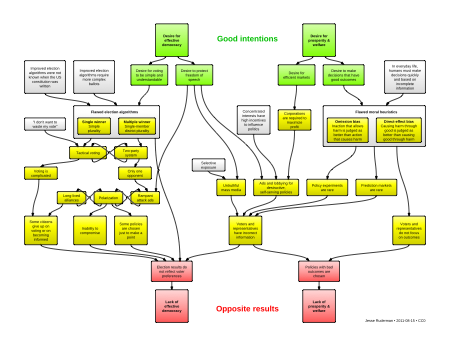

An overview of how democracy can go wrong, even when everyone has good intentions

PNG · PDF · SVG · Fork on LucidChart

Election algorithms

Top left: We should reevaluate our choice of election algorithms.

Using simple plurality causes high levels of tactical voting and strategic nomination, and frequently produces results not desired by the majority.

A familiar example of tactical voting is declining to vote for your favorite third-party candidate, and instead voting for the "lesser of two evils", because you "don't want your vote to be thrown away".

A familiar example of strategic nomination is funding a weak opponent in the hope of splitting your main opponent's vote.
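To make the vote-splitting problem concrete, here is a minimal sketch with a made-up electorate of 100 voters. The candidate names (`A1`, `A2`, `B`), the ballot counts, and the helper functions are all hypothetical, chosen only for illustration. `A1` and `A2` are similar candidates who share a 60-voter majority, while `B` has 40 committed supporters.

```python
from collections import Counter

# Hypothetical electorate: (number of voters, ranking from most to least preferred).
BALLOTS = [
    (35, ["A1", "A2", "B"]),
    (25, ["A2", "A1", "B"]),
    (40, ["B", "A1", "A2"]),
]


def plurality_winner(ballots):
    """Count only each ballot's first choice; the largest tally wins."""
    tally = Counter()
    for count, ranking in ballots:
        tally[ranking[0]] += count
    return tally.most_common(1)[0][0], dict(tally)


def prefer_count(ballots, x, y):
    """Number of voters who rank candidate x above candidate y."""
    return sum(count for count, ranking in ballots
               if ranking.index(x) < ranking.index(y))


winner, tally = plurality_winner(BALLOTS)
print("Plurality winner:", winner, tally)
print("Voters preferring A1 to B:", prefer_count(BALLOTS, "A1", "B"))
print("Voters preferring B to A1:", prefer_count(BALLOTS, "B", "A1"))
```

With these ballots, B wins under simple plurality on 40 first choices, even though 60 of the 100 voters rank A1 above B. Anyone who wanted B to win would have had an incentive to encourage A2 to run.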

Simple plurality also entrenches two-party systems. When only two parties are viable, damaging your opponent is as useful as promoting yourself, which makes attack ads an effective strategy for politicians. The resulting polarization makes reasonable debate and compromise difficult.

I am a fan of instant round robin (Condorcet) methods. I especially like the beatpath (Schulze) method, since its independence of clones property suggests resistance to strategic nomination.
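As a rough illustration of how the beatpath method works, the sketch below computes pairwise preferences from ranked ballots, finds the strongest path between every pair of candidates, and returns the candidates whose paths are never beaten. It assumes every ballot ranks every candidate with no ties, and it measures path strength by winning votes, which is one of several conventions. The ballots and function name are hypothetical.

```python
from itertools import permutations

# Hypothetical electorate: (number of voters, complete ranking, best first).
CANDIDATES = ["A1", "A2", "B"]
BALLOTS = [
    (35, ["A1", "A2", "B"]),
    (25, ["A2", "A1", "B"]),
    (40, ["B", "A1", "A2"]),
]


def schulze_winners(ballots, candidates):
    """Return the candidates that win under the beatpath (Schulze) rule."""
    # d[x][y] = number of voters who rank x above y.
    d = {x: {y: 0 for y in candidates} for x in candidates}
    for count, ranking in ballots:
        position = {c: i for i, c in enumerate(ranking)}
        for x, y in permutations(candidates, 2):
            if position[x] < position[y]:
                d[x][y] += count

    # p[x][y] = strength of the strongest path from x to y.
    # A direct link x -> y exists only if x beats y head to head.
    p = {x: {y: d[x][y] if d[x][y] > d[y][x] else 0 for y in candidates}
         for x in candidates}

    # Widest-path variant of Floyd-Warshall: a path is only as strong
    # as its weakest link, and we keep the strongest path found so far.
    for i in candidates:
        for j in candidates:
            if j == i:
                continue
            for k in candidates:
                if k == i or k == j:
                    continue
                p[j][k] = max(p[j][k], min(p[j][i], p[i][k]))

    # x wins if no other candidate has a stronger path to x than x has to them.
    return [x for x in candidates
            if all(p[x][y] >= p[y][x] for y in candidates if y != x)]


print(schulze_winners(BALLOTS, CANDIDATES))
```

In this electorate A1 beats both other candidates head to head, so the method returns A1 whether or not the similar candidate A2 is on the ballot. Simple plurality, counting only first choices, would instead elect B.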

Every ranked election method with three or more candidates violates some intuitive criterion (Arrow). And every such method that is not a dictatorship sometimes admits tactical voting (Gibbard–Satterthwaite). But simple plurality is especially bad, and we should stop using it.

Moral heuristics

Top right: We should reevaluate our moral heuristics, upon realizing that our heuristics are motivated by a desire for (and often fail to create) prosperity, welfare, and happiness.

Moral heuristics serve us well in everyday life, where we are confronted with the need to make decisions quickly and with incomplete information. But the same heuristics can become counterproductive biases when applied to questions of policy. Examples include omission bias, as seen in the trolley-switch problem, and direct-effect bias, seen in the trolley-footbridge problem.

We should take care that we do not come to treat our moral heuristics as ends in and of themselves. (For more on the role of heuristics in consequentialist ethics, I suggest reading Siskind · Baron · Bennis+ · Bazerman+.)

Removing bad information

Top center: We should reevaluate our protections for freedom of speech, upon realizing that our protections are motivated by a desire for (and fail to create) effective democracy.

Protection for freedom of speech is motivated by a desire to ensure governments are not immune from criticism, to keep the powerless from feeling silenced, and to increase access to truth. In some cases, it is not clear that the protected speech furthers any of these goals.

Perhaps freedom of speech should be limited in cases where the speaker has wide reach and says things that are demonstrably false, as an expansion of libel law. Or perhaps there should be limits on spending large amounts of money to amplify political speech. Other criteria that might be worth considering are intent to mislead, the speaker's power or incumbency, and whether the medium and timing make it possible to reply.

On the other hand, additional restrictions might not be worth the effort. The undesirable speech would probably become less effective, but not disappear completely. Any ambiguities in the law would create problems for both courts and speakers.

Adding good information

Center right: Perhaps the best way to combat incorrect information is with correct information.

The CFTC (the US Commodity Futures Trading Commission) should stop discouraging the creation of economic prediction markets. Betting on unemployment, for example, serves legitimate hedging and transparency interests. Political discourse would improve if we had transparent predictions on topics other than the fate of large companies.

Policy experiments should be more common. Just as we require clinical trials for new medications, we should run field experiments for new policies when possible.

More government data should be open. Organizations like MySociety, Code For America, and Wolfram Alpha have done amazing things to help us visualize, interpret, and use the information available so far.

History and technology

Some advances in technology have weakened democracy by amplifying the problems highlighted in the chart.

First, increased interconnectedness is requiring democracies to operate at unprecedented scale. Advances in transportation, communication, and warfare impel us to make some important decisions at the US federal level.

Increased distance and heterogeneity test our individual capacity for empathy and altruism. Large scales magnify the incentives for concentrated interests to attempt to influence policy.

Second, the tools of subversion are improving. Advances in psychology and statistics allow for extremely manipulative advertising. Instant polling shifts focus from outcomes to opinions, and from policy to strategy.

But new tools that could strengthen democracy are also available, if we choose to use them.

An increasingly deep understanding of cognitive biases improves our capacity for reflection. Our experience running financial markets gives us ideas about how to create effective prediction markets.

And crucially, we have computers: computers to implement the voting algorithms invented as part of modern social choice theory, computers to run the advanced statistics that make field experiments reliable, and computers to allow citizens to make creative use of open government data.